3D Human Pose Estimation¶

Estimation of human joint coordinates from an image is a hard problem to solve with classic computational methods. As is typical with many problems in computer vision, the current best approach is to use deep neural networks to learn the correct representation. The robot uses the method developed in "3D Human Pose Estimation in Video with Temporal Convolutions and Semi-Supervised Training" [1] to generate the cordinates of each joint in 3D space. In this section the paper is briefly reviewed, along with the necessary modifications made to the original code that was released with this paper.

Paper Summary¶

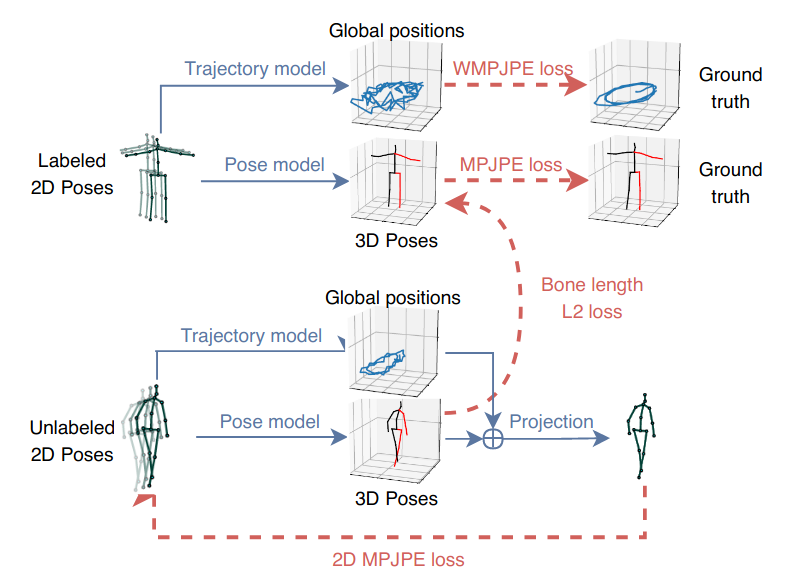

A common practice for 3D pose detection is to first estimate a 2D skeleton from an image, and then to use this representation to predict the final 3D coordinates. While this method has the issue that than mutiple 3D skeleton frames could be cast to the same 2D model, in practice it achieves state of the art performance. Given this, the paper uses the pre-trained Detectron2 [2] network developed by Facebook AI to perform the initial 2D skeleton frame detection from each image in a video stream.

Next, a neural network that can predict the 3D pose from the output of the Detectron2 model is required. The authors opt to use a fully convolutional method that performs temporal convolutions on a series of past predictions from the Detectron2 model. This is motivated by the assumption that past frames can give additional information about how to generate the 3D model. For a real time implimentation, it is important that causal convolutions are used, meaning that the network is trained and run by using only information in the past. The difference between the two types of convolutions can be seen in Figure 1 below. The benefit of using a fully convolutional method like this is that the computation is fast, and efficently scales to consider exponentially many frames in the past, with just a linear increase in network parameters.